Using Differential Privacy to Protect Employee Analytics Platforms

In today’s data-driven workplace, HR and operations teams increasingly rely on employee analytics platforms to monitor productivity, engagement, attrition risk, and more.

But behind every metric is a person—and mishandling personal data can lead to trust erosion, legal penalties, or internal backlash.

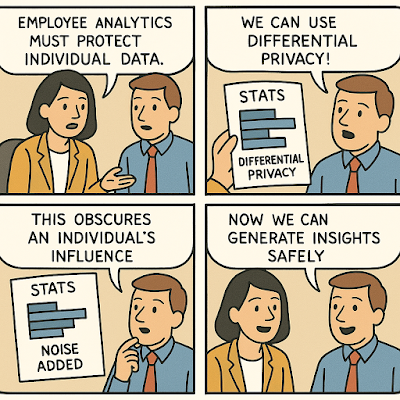

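Differential privacy offers a powerful solution. It is a mathematical framework for adding calibrated statistical noise to published results, so that no output reveals much about any individual employee. The protection holds even as dashboards slice the data into finer groups, although smaller groups mean noisier figures.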

📌 Table of Contents

- What Is Differential Privacy?

- Why It Matters in Employee Analytics

- How It Works in Practice

- Tools, Platforms, and Use Cases

- Conclusion

🔐 What Is Differential Privacy?

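Differential privacy is a technique that introduces controlled randomness into query results, so that any given output is almost equally likely whether or not one particular individual's data is included in the dataset. As a result, someone viewing the results cannot confidently tell whether a specific employee's record was used, let alone what it contains.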

The strength of this guarantee is quantified by a privacy-loss parameter called epsilon (ε): the lower the value, the stronger the privacy and the more noise must be added.
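Formally, a randomized mechanism M satisfies ε-differential privacy if, for any two datasets D and D′ that differ in one employee's record and for any set S of possible outputs, the first inequality below holds. The Laplace mechanism is one standard way to achieve it. It adds noise drawn from a Laplace distribution whose scale is the query's sensitivity Δf (the most that one record can change the true answer f) divided by ε:

$$
\Pr[M(D) \in S] \le e^{\varepsilon} \, \Pr[M(D') \in S]
$$

$$
M(D) = f(D) + \mathrm{Lap}\!\left(\frac{\Delta f}{\varepsilon}\right), \qquad \Delta f = \max_{D, D' \text{ neighboring}} \lvert f(D) - f(D') \rvert
$$

For a simple headcount, Δf = 1, so at ε = 0.5 the noise has scale 2. Halving ε doubles the noise scale.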

Apple and Google use differential privacy when collecting usage statistics from users' devices and browsers, and the technique is gaining traction in enterprise analytics.

👥 Why It Matters in Employee Analytics

Employee analytics systems gather sensitive data such as:

- Performance metrics

- Login patterns and tool usage

- Survey responses and well-being indicators

Even after names and employee IDs are removed, cross-referencing attributes (like department + location + job title) can re-identify individuals, because some combinations describe only one person.
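To make the re-identification risk concrete, here is a minimal Python sketch over a few hypothetical HR records with names already removed. It counts how many people share each department/location/title combination and flags combinations that match exactly one person. The records and the helper names (`cohort_sizes`, `unique_combinations`) are illustrative assumptions; this check only exposes the problem and is not itself differential privacy.

```python
from collections import Counter
from typing import Dict, Iterable, List, Sequence, Tuple

# Hypothetical HR records: names removed, but quasi-identifiers remain.
records = [
    {"department": "Sales", "location": "Boston", "title": "Account Executive"},
    {"department": "Sales", "location": "Boston", "title": "Account Executive"},
    {"department": "Sales", "location": "Boston", "title": "Sales Director"},
    {"department": "Engineering", "location": "Austin", "title": "Engineer II"},
    {"department": "Engineering", "location": "Austin", "title": "Engineer II"},
]

QUASI_IDENTIFIERS = ("department", "location", "title")


def cohort_sizes(rows: Iterable[Dict[str, str]], keys: Sequence[str]) -> Counter:
    """Count how many rows share each combination of quasi-identifier values."""
    return Counter(tuple(row[k] for k in keys) for row in rows)


def unique_combinations(
    rows: Iterable[Dict[str, str]], keys: Sequence[str]
) -> List[Tuple[str, ...]]:
    """Combinations shared by exactly one person, i.e. re-identifiable rows."""
    return [combo for combo, n in cohort_sizes(rows, keys).items() if n == 1]


if __name__ == "__main__":
    for combo in unique_combinations(records, QUASI_IDENTIFIERS):
        print("Matches exactly one employee:", combo)
```

Here the lone Sales Director in Boston is singled out even though no name appears anywhere in the data.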

Differential privacy lets insights be shared across management while mathematically limiting what any published figure reveals about an individual employee.

🧮 How It Works in Practice

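To implement differential privacy, analytics platforms typically:

- Group employees into cohorts or aggregates (e.g., “sales team, Boston office”)

- Add random noise, calibrated to the query's sensitivity and the chosen ε, to output metrics (e.g., an average working-time figure might be perturbed by a random amount on the order of ±1.5 hours)

- Suppress low-count results (e.g., cohorts with fewer than 5 people), ideally deciding on a noisy count, since a threshold applied to the exact count can itself leak information

- Log every query so the platform can track cumulative privacy loss, because each query spends part of the privacy guarantee and averaging many noisy answers to the same question can wash out the noise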
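The steps above can be sketched for a single metric: average weekly hours for one cohort. This minimal Python example assumes hours are clipped to a hypothetical 0 to 80 range, splits ε evenly between a noisy sum and a noisy count (their privacy losses add up to ε), and suppresses the result when the noisy count falls below 5. The function names and defaults are illustrative assumptions. The Laplace sampler uses Python's `random` module for readability only; naive floating-point Laplace sampling is known to leak information, so a vetted library such as OpenDP is the safer choice in production.

```python
import random
from typing import Optional, Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws.

    Illustration only: naive floating-point Laplace sampling is known to leak
    information in practice; production code should use a vetted DP library.
    """
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_mean_hours(
    hours: Sequence[float],
    epsilon: float,
    lower: float = 0.0,        # assumed plausible minimum weekly hours
    upper: float = 80.0,       # assumed plausible maximum weekly hours
    min_cohort: float = 5.0,   # suppression threshold, applied to the noisy count
    rng: Optional[random.Random] = None,
) -> Optional[float]:
    """Epsilon-DP estimate of a cohort's mean weekly hours, or None if suppressed."""
    if epsilon <= 0 or min_cohort <= 0:
        raise ValueError("epsilon and min_cohort must be positive")
    if rng is None:
        rng = random.Random()

    # Clip values so one employee can shift the sum by at most max(|lower|, |upper|).
    clipped = [min(max(h, lower), upper) for h in hours]
    sum_sensitivity = max(abs(lower), abs(upper))

    # Split the budget: the two noisy releases compose to epsilon in total.
    eps_each = epsilon / 2
    noisy_sum = sum(clipped) + laplace_noise(sum_sensitivity / eps_each, rng)
    noisy_count = len(clipped) + laplace_noise(1 / eps_each, rng)

    # Suppress small cohorts using the noisy count (post-processing, no extra cost).
    if noisy_count < min_cohort:
        return None

    # Divide and clamp to the valid range; both are post-processing steps.
    return min(max(noisy_sum / noisy_count, lower), upper)


if __name__ == "__main__":
    # Hypothetical weekly hours for the "sales team, Boston office" cohort.
    boston_sales = [41.0, 38.5, 45.0, 52.0, 39.0, 44.5, 47.0, 40.0]
    print(dp_mean_hours(boston_sales, epsilon=1.0))
```

With ε = 1 and an 80-hour clipping bound, the noise on the sum has scale 160 hours, which is large relative to an eight-person cohort. This is why the clipping range, ε, and minimum cohort size are usually tuned together.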

Advanced systems may also assign each HR user a “privacy budget”, a cap on the total ε their queries can consume, and refuse further queries once it is spent.
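A per-user privacy budget can be sketched as a small accountant. This hypothetical `PrivacyAccountant` class assumes basic sequential composition (the ε values of successive queries simply add up), logs every query, and refuses any query that would exceed the user's cap. Production systems may use tighter composition accounting; the class, its methods, and the user and query labels are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


class BudgetExceeded(Exception):
    """Raised when a query would push a user past their epsilon budget."""


@dataclass
class PrivacyAccountant:
    """Hypothetical per-user budget tracker using basic sequential composition."""

    budget_per_user: float
    spent: Dict[str, float] = field(default_factory=dict)
    log: List[Tuple[str, str, float]] = field(default_factory=list)

    def remaining(self, user: str) -> float:
        """Epsilon this user can still spend."""
        return self.budget_per_user - self.spent.get(user, 0.0)

    def charge(self, user: str, query: str, epsilon: float) -> None:
        """Log a query and deduct its epsilon, or refuse it if over budget."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        # Small tolerance so float round-off does not block an exact final query.
        if epsilon > self.remaining(user) + 1e-12:
            raise BudgetExceeded(
                f"{user} has {self.remaining(user):.3f} epsilon left; query needs {epsilon}"
            )
        self.spent[user] = self.spent.get(user, 0.0) + epsilon
        self.log.append((user, query, epsilon))


if __name__ == "__main__":
    acct = PrivacyAccountant(budget_per_user=1.0)
    acct.charge("hr_analyst_1", "mean hours: Sales/Boston", 0.5)
    acct.charge("hr_analyst_1", "mean hours: Sales/Boston (repeat)", 0.5)
    try:
        acct.charge("hr_analyst_1", "mean hours: Sales/Boston (third try)", 0.5)
    except BudgetExceeded as err:
        print("Refused:", err)
```

The refusal on the third query is the point of the design: without it, an analyst could average many noisy answers to the same question and recover something close to the true value.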

🛠️ Tools, Platforms, and Use Cases

- OpenDP: Harvard-led open-source library for differential privacy

- Google’s Privacy on Beam: Integrates differential privacy into Apache Beam pipelines

- LeapYear: Offers enterprise-grade differential privacy for workforce and financial data

- Worklytics: Applies DP to productivity and collaboration insights in Google Workspace environments

Use cases:

- HR dashboards showing burnout indicators while maintaining individual anonymity

- DEI (Diversity, Equity, Inclusion) metrics that do not reveal the identities of respondents from small minority groups

- Productivity trends visualized at team or regional level rather than by individual
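For survey-style metrics such as the DEI case above, a common pattern is a noisy histogram. In this minimal sketch, adding or removing one respondent changes exactly one category count by 1, so adding Laplace noise with scale 1/ε to every count protects the whole histogram at ε. It assumes the category list is fixed in advance rather than derived from the responses, since publishing only the categories that appear in the data could itself expose a lone respondent. The function name and group labels are hypothetical, the sampler has the same production caveat as any naive floating-point Laplace sampler, and rounding and clamping at zero are post-processing that do not weaken the guarantee.

```python
import random
from collections import Counter
from typing import Dict, Iterable, Optional, Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) via two exponential draws (illustrative, not production-grade)."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_histogram(
    responses: Iterable[str],
    categories: Sequence[str],
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> Dict[str, int]:
    """Epsilon-DP counts per category, assuming one response per respondent."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    counts = Counter(responses)
    # Sensitivity is 1, so Laplace(1/epsilon) noise on every count covers the histogram.
    # Every listed category is noised, including ones with a true count of zero.
    return {
        c: max(0, round(counts.get(c, 0) + laplace_noise(1 / epsilon, rng)))
        for c in categories
    }


if __name__ == "__main__":
    # Hypothetical self-identification answers from one team's engagement survey.
    answers = ["Group A"] * 12 + ["Group B"] * 4 + ["Group C"]
    groups = ["Group A", "Group B", "Group C", "Prefer not to say"]
    print(dp_histogram(answers, groups, epsilon=1.0))
```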

💡 Conclusion

Employee analytics doesn’t have to be invasive to be insightful.

With differential privacy, organizations can unlock trends, boost performance, and track engagement without sacrificing individual trust, while supporting compliance with privacy laws.

It's not just a tech upgrade—it's a cultural contract between analytics and ethics.
